EE01 • The AI Perspective Most Founders and Investors Miss

Altman and Ive’s $6.5B bet. Decoded, challenged and tied to the mindset shift no one’s talking about.

What a week.

NVIDIA, OpenAI, Google I/O, Claude 4, Apple, Microsoft… Everyone’s making waves. But let’s slow down.

It’s easy to ride the hype train. It’s harder to pause and ask: what’s actually shaping this wave?

This issue zooms out to uncover what’s really moving the needle, not just lighting up the stage.

And no, it’s not just another demo dressed up in sci-fi.

Shocking, I know.

The Essentials:

Altman & Ive's $6.5B bet isn't about hardware.

It’s about shaping how we interact with intelligence and how we organize around it. Hardware is just the delivery system.

The real edge isn't model quality. It's interface monopoly.

Whoever defines the defaults wins the game. I hear you: "Interface is nice, but you need users first." Fair. OpenAI didn't win on taste alone; it had the first viral distribution flywheel. While others had competitive models, OpenAI made AI usable, then inevitable.

AI-native organizations think and act differently.

They won't look like SaaS companies. They'll evolve like neural networks. Fluid, adaptive, learning.

Three aspects separate companies built with AI from companies using AI: structure, workflow and adaptation speed.

Most teams are fighting the last war.

Still bolting AI onto 2019 workflows. Winners build companies with AI, not just on top of it.

The Horseless Carriage Trap

You can't redefine the future by duct-taping LLMs to yesterday's UX.

Felt computing beats functional computing. Products that feel inevitable create switching costs that pure functionality can't match.

Users aren't endpoints anymore.

They're co-designing your product in real time through every interaction. While most founders are building for today's expectations, OpenAI is rewriting tomorrow's defaults.

“The best way to take care of the future is to take care of the present moment.”

— Thich Nhat Hanh

01. The Infrastructure Is Ready. Are We?

Today, most people are asking what AI can build. The better question? What kind of organizations can think with AI?

Unlike any wave before it, AI adoption has no distribution problem. The infrastructure is here. We have everything we need: compute, networks, data, and, most importantly, talent. Globally. (Anyone still arguing otherwise probably thinks prompt engineering is a fad, too.)

What separates builders now isn’t access. It’s taste and conviction. And taste isn't a moodboard. It's earned:

by asking sharper questions.

by building through ambiguity.

by combining technical intuition with behavioral insight.

This wave is different.

On November 30, 2022, the world saw ChatGPT, and AI stopped being a science fair project. People started believing. (Before that, ML teams were the lab’s magicians: great at demos, but no one bet the company on them.)

Jensen Huang declared,

“Every single pixel will be generated, not rendered.”

In this generative era, company-building itself may become the work of agents.

Organizations could start to behave less like hierarchies—and more like neural networks. Fluid. Embedded. Continuously learning alongside humans. This shift demands entirely new protocols for identity, communication, and trust. Just like the internet once did.

The age of app-centric thinking is fading. We're entering a world of system-level, data-native, intelligence-first design.

So let’s take a step back and unpack what that really means.

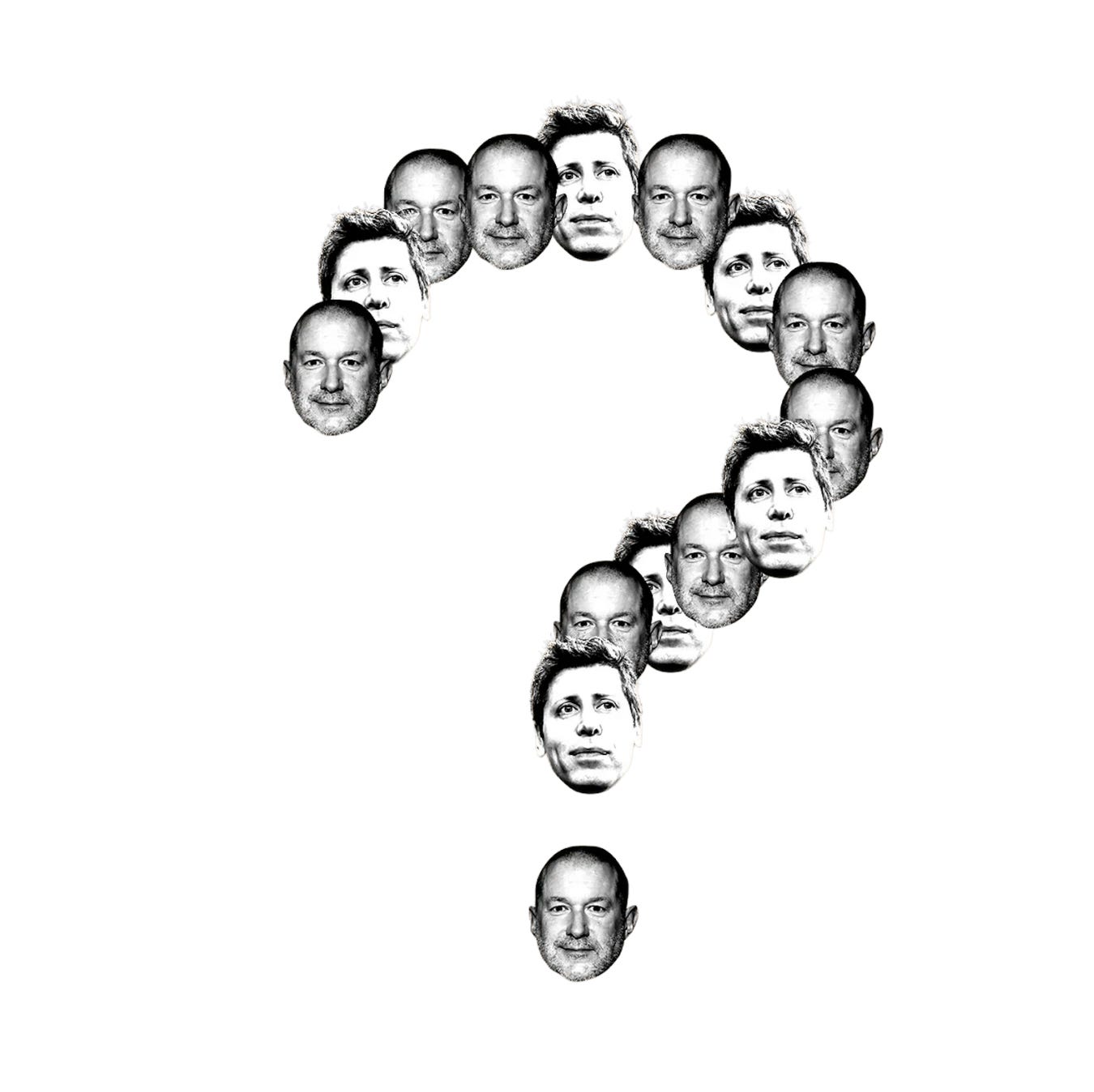

1.1. This Isn’t About a Device

The real bet behind the $6.5B move is neither a device nor a typical product launch. Altman’s vision with Ive marks the end of functional computing and the rise of felt computing.

Ive isn’t designing a device. He’s designing an organizational form that can continuously reshape human–AI interaction. Most AI startups are fighting the last war, while a new one quietly redefines how humans expect to coexist with machines.

This collaboration signals the death of the old model: engineers build, designers polish. The new frontier demands products that don’t just work; they feel inevitable.

OpenAI doesn’t want to compete. It wants to reinvent, redefine, and own the interaction.

02. AI Isn’t the Product, It’s the Company

We’re entering a new era, one that redefines how we work, how companies run, and how value gets created. When that’s the case, you don’t lean on a ’90s way of operating. You design your company for the intelligence age. Like a neural net: adaptive, layered, always learning. Where agents talk to each other, collaborate with humans, and make decisions in motion.

This isn’t just a new interface. It’s a new kind of intelligence, a new kind of economy, and a new kind of AI-native company DNA. That’s exactly what Ive is doing: designing a company form that can continuously reshape human-AI interaction patterns.

One that levels up continuously. One that demands a stochastic mindset, where outcomes aren’t engineered; they emerge.

Deterministic outputs will give way to context-driven, dynamic results where our minds adapt alongside self-improving models.

That’s the real play behind the OpenAI–Ive collaboration. It’s not about hardware. It’s about building AI-native teams that can out-innovate even Apple. But their target isn’t Apple. Or Google. They want to lead the way to AGI without dragging along web-era baggage.

2.1. The Org Design Test

Three questions to escape the wrapper trap:

Structure: Are you hiring for AI-native roles or retrofitting AI into traditional ones?

Example: Adept vs. IBM

Adept is designing roles around AI-native workflows—prompt engineers, model behavior testers, and environment trainers.

IBM, by contrast, often retrofits AI into legacy job structures (e.g. data scientists under IT, not product). The result? Slower integration, less agility.

Workflow: Does your org assume humans lead and AI assists, or can AI reshape how decisions get made?

Example: Runway vs. Adobe

Runway bakes AI into the core creative workflow. Users co-create with models in real time.

Adobe, while integrating generative features, still positions AI as a “tool” in the traditional sense. An assistant, not a collaborator.

Evolution: Can your company structure evolve as fast as your AI stack?

Example: OpenAI vs. Google

OpenAI reorganizes itself constantly—launching new teams, moonshot projects, even partnerships (like with Jony Ive) when needed.

Google has incredible AI capability—but internal org friction (two Gemini APIs, competing teams) slows execution. The tech is ready. The org isn’t.

These examples show: It's not just about using AI. It's about building a company that thinks with it. Structurally, culturally, operationally. But even AI-native organizations need one more advantage to stay ahead: interface control.

Here's where the OpenAI-Jony Ive story gets really interesting.

03. The Real Product Isn't Hardware. It's How We’ll Get Rewired

Hardware is just the delivery system. The real product is the interaction paradigm itself.

We're not designing screens anymore.

We're designing systems that feel like magic.

3.1. The Surface Story

OpenAI + Apple's former design chief = they're building an AI device to compete with smartphones.

3.2. The Real Story

They're trying to establish how humans expect to interact with AI before anyone else can.

3.3. Here's the Deeper Pattern

Interface Monopoly = Interaction Paradigm Control

3.4. Who Defines the Default, Defines the Game

Everyone thinks this is about building the iPhone of AI.

They’re wrong.

This isn’t about a device. It’s about locking in how we expect to interact with intelligence, not just how we use it. The real battle isn’t hardware vs. software.

It’s about who controls the interaction paradigm.

Apple didn’t just build better phones. Nope. They redefined how we think about computing in our hands. They taught the world what “intuitive” feels like. Now every screen pinch, swipe, and tap lives inside the mental model they created.

That’s interface monopoly 101.

And it’s far stickier than hardware. OpenAI is playing the same game with intelligence. They’re trying to define:

How AI responds to human intent

What “natural” interaction with intelligence feels like

How intelligence fits into our workflows, not just our apps

3.5. Because Interface Control Is Existential Control

If OpenAI succeeds, every other AI company will be forced to compete within their interaction paradigm. Even if competitors have better models or cheaper prices, users will expect AI to work "the OpenAI way."

Once users get used to a certain feel, they won’t unlearn it.

Ask Google how Gemini is doing inside OpenAI-shaped expectations. This is why Google struggles with Gemini despite having great technology. They’re still competing inside interaction patterns OpenAI already defined (chat-based, intent-first, emotionally responsive).

This isn’t about market share or feature sets. It’s about proving that felt computing beats functional computing.

The Altman and Ive collaboration marks the end of the old model: Engineers build, designers polish.

That era’s over.

3.6. The Real Battle

It's not "Will their device succeed?"

It's "Can they lock in how AI should feel and behave?"

If they can do that, they win even if the hardware flops. Because every other AI product will be measured against the interaction standard they set. Make sense?

The hardware is just the delivery mechanism. The real product is the interaction paradigm itself.

Again, this isn't about building another gadget. Or capturing the market. It’s about overriding every constraint to define what intelligence should feel like.

Every major computing platform needed its own interface language:

CLI → GUI → Touch → ?

So ask yourself:

Why are we still shoving AI into yesterday’s UI?

Still designing AI like it needs buttons and dropdowns?

Cute.

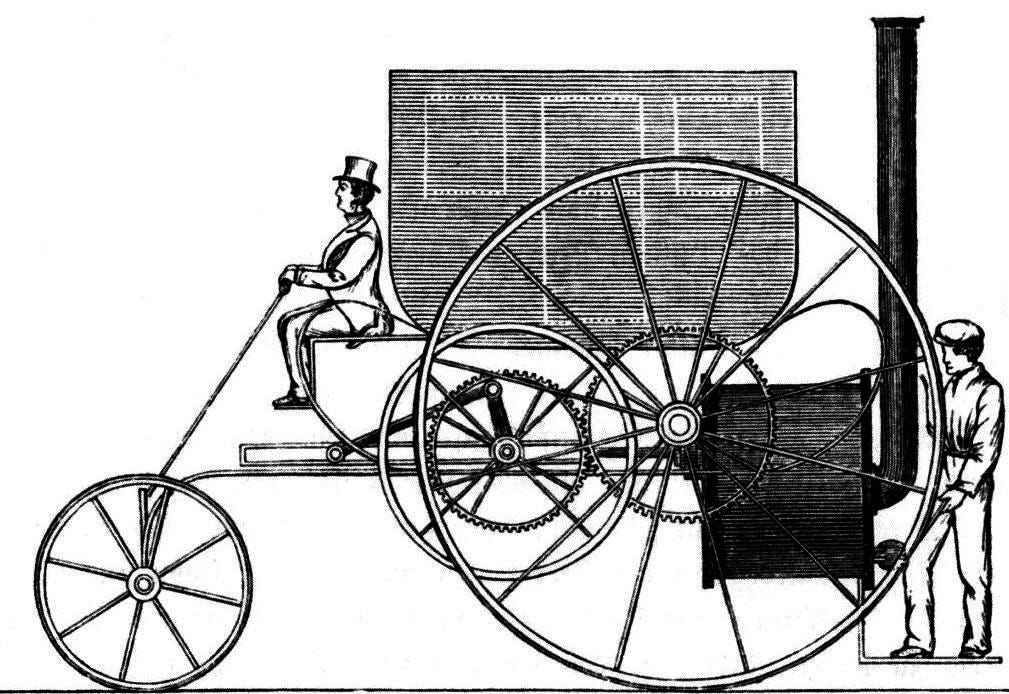

That’s your horseless carriage moment.

3.7. Users Are Now Co-Designers

The interface isn’t just something you build for users. It’s something users now build with you.

Traditional software had clear boundaries: you ship features, users consume them. AI products blur these boundaries. Conversations can become training signal. Corrections can shape future behavior.

Usage feeds back into the models. Prompts are interface. Fine-tuning increasingly draws on real-world use. The biggest shift? Your product is no longer shaped solely by your team. It’s shaped by behavior at scale, in real time.

Design for interaction and the interaction evolves. Design for intent and the user becomes a system collaborator.

The best AI-native products won’t just anticipate intent. They’ll learn what “good” feels like by watching us use them.

That’s a fundamental shift from the SaaS/Web2 mindset:

Old (Human-driven iteration): Launch → Analyze → Optimize

users = endpoints

product = fixed experience

feedback = post-hoc

New (System-driven evolution): Launch → Adapt (real-time) → Evolve

users = co-designers

product = evolving system

feedback = real-time training

Smart teams are designing for this collaborative reality. The interface becomes conversational, not transactional.

04. The Horseless Carriage Trap

Pete Koomen wrote an excellent piece on how most AI products today still mimic the past: same old interfaces, now with LLMs duct-taped on top. Instead of rethinking interaction from first principles, we’re busy adding autocomplete to dashboards and calling it the future.

In my view, we’re not failing to invent new interfaces. We’re failing to admit the old ones won’t survive. And still expecting users to call that “magical.”

Zoom out, and the pattern gets worse.

We're still thinking "AI + existing interface" instead of "intelligence as interface."

Same with org design: what we mostly see is “AI + existing workflow” instead of “AI as organizational DNA.”

Most startups are hiring like it’s still 2019, then bolting AI on top. It’s the organizational wrapper problem. But the real unlock? Companies that think with AI, not just use it.

Because when you think with AI, you stop asking how to fit it in and start asking:

What if the winning interface looks nothing like our current assumptions?

Ever thought about that?

4.1. The Horseless Carriage Test

Three questions to escape conceptual lock-in:

Are you building AI tools or reimagining the underlying workflow?

If AI just speeds up what already exists, you're not transforming anything. You're optimizing legacy.

Example: Notion vs. Salesforce

Notion is using AI to collapse search, writing, and synthesis into a single intent-driven workflow. It’s not just adding a chatbot to documents—it’s questioning what a document even is.

Salesforce, on the other hand, is layering AI onto CRM workflows—generating emails, auto-filling data. Helpful, but the workflow is still fundamentally the same.

What assumptions about “interface” are you still carrying from the previous era?

Are you defaulting to screens, clicks, dashboards, and forms, just because that's what you're used to?

Example: Rabbit OS vs. Microsoft Copilot

Rabbit OS questions whether apps even need to exist—introducing a universal agent UI that moves across contexts.

Microsoft Copilot sticks to adding smart features inside Office and Teams. Powerful, but deeply nested in old mental models (menus, files, apps).

If you removed every familiar UI element, what would users actually need?

Think beyond inputs and outputs. What does it mean to design for intent, not interaction?

Example: Humane AI Pin vs. Meta AI in WhatsApp

Humane’s AI Pin tried to replace screens with presence- and intent-based interaction. It was clunky, and it has since been discontinued, but it was bold.

Meta AI in WhatsApp shows up inside your chat—with no clear intent model, limited usefulness, and a weird “why is this here?” vibe. A UI transplant, not an interface rethink.

05. Where the Edge Actually Is

While the world debates AI capabilities, the real edge belongs to those designing companies, not just products, for the intelligence age. To sum it up, I see four intelligence-native shifts:

Functional computing → Felt computing

Static team hierarchies → Neural team networks

Command interfaces → Intent interfaces

AI as add-on → AI as native DNA

In the age of infinite tools, the real mystery isn't what you can build but what's worth building.

And more importantly: What will still matter when intelligence becomes ambient, when GPT-5 is table stakes and every workflow gets automated by default?

06. One Prompt, One Truth

Are you building a product people choose, or one they default to because it feels inevitable?

End of Line.

{ Next release loading... }

Stay essential,

Nihal

P.S. If this made something click or question what you’re building, I want to hear from you.

Or forward it to someone still designing for 2019. They'll catch up, eventually.

P.P.S. Find Pete Koomen's full essay here → AI Horseless Carriages